A new study published in Nature by University of Cambridge researchers just dropped a pixelated bomb on the entire Ultra-HD market, but as anyone with myopia can tell you, if you take your glasses off, even SD still looks pretty good :)

I have a 65" 4K TV that runs in tandem with a Beelink S12 Pro mini PC. I run the mini PC in FHD mode to ease up on resources and usually just watch streams/online content on it, which is 99% 1080p@60. Unless compression is bad, I don’t feel much difference. In fact, my digitized DVDs look good even in their native resolution.

For me 4K is a nice-to-have but not a necessity when consuming media. 1080p still looks crisp with enough bitrate.

I’d add that maybe this 4K-8K race is sort of like mp3@320kbps vs flac/wav. Both sound good when played on a decent system. But say, flac is nicer on specific hardware that a typical consumer wouldn’t buy. Almost none of us own studio-grade 7.1 systems at home. A JBL speaker is what we have, and I doubt flac sounds noticeably better on it than mp3@192kbps.

Yeah, when I got my most recent GPU, my plan had been to also get a 4k monitor and step up from 1440p to 4k. But when I was sorting through the options to find the few with decent specs all around, I realized that there was nothing about 1440p that left me disappointed, and the 4k monitor I had used at work already indicated that I’d just be zooming the UI anyways.

Plus even with the new GPU, 4k numbers weren’t as good as 1440p numbers, and stutters/frame drops are still annoying… So I ended up just getting an ultra-wide 1440p monitor that was much easier to find good specs for and won’t bother with 4k for a monitor until maybe one day if it becomes the minimum, kinda like how analog displays have become much less available than digital displays, even if some people still prefer the old ones for some purposes. I won’t dig my heels in and refuse to move on to 4k, but I don’t see any value added over 1440p. Same goes for 8k TVs.

Interestingly enough, I was casually window browsing TVs and was surprised to find that LG killed off their OLED 8K TVs a couple years ago!

Until/if we get to a point where more people want/can fit 110in+ TVs into their living rooms - 8K will likely remain a niche for the wealthy to show off, more than anything.

Quality of the system is such a massive dependency here, I can well believe that someone watching old reruns from a shitty streaming service that is upscaled to 1080p or 4k by their TV they purchased from the supermarket with coupons collected from their breakfast cereal is going to struggle to tell the difference.

Likewise if you fed the TVs with a high end 4k blu ray player and any blu ray considered reference such as Interstellar, you are still going to struggle to tell the difference, even with a more midrange TV unless the TVs get comically large for the viewing distance so that the 1080p screen starts to look pixelated.

I think very few people would expect amazing sound from the old wired Apple earphones they got free with their iPhone 4, yet people seem to be ignoring the same for cheap TVs. I am not advocating for ultra high end audio/videophile nonsense with systems costing 10s of thousands, just that quite large and noticeable gains are available much lower down the scale.

Depending on what you watch and how you watch it, good quality HDR for the right content is an absolute home run for the difference between standard 1080p and 4k HDR if your TV can do true black. Shit TVs do HDR shitterly, it’s just not comparable to a decent TV and source. It’s like playing high-res lossless audio on those old Apple wired earphones vs. playing low bitrate MP3s.

After years of saying I think a good 1080p TV, playing a good quality media file, looks just as good on any 4k TV I have seen, I now feel justified…and ancient.

Same.

Also, for the zoomers who might not get your reference to the mighty KLF:

An overly compressed 4k stream will look far worse than a good quality 1080p. We keep upping the resolution without getting newer codecs and not adjusting the bitrate.

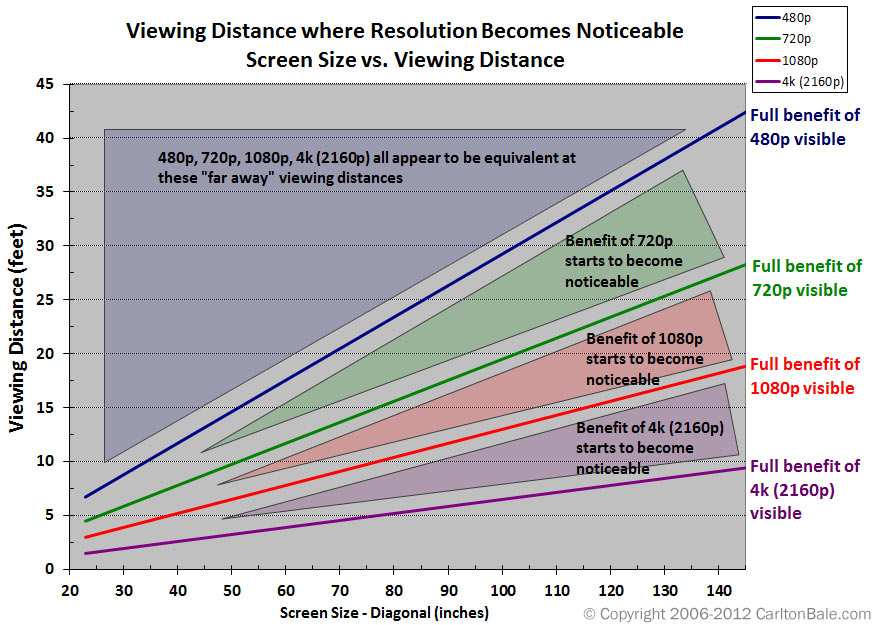

This is true. That said, if you can’t tell the difference between 1080p and 4K from the pixels alone, then either your TV is too small, or you’re sitting too far away. In which case there’s no point in going with 4K.

At the right seating distance, there is a benefit to be had even by going with an 8K TV. However, very few people sit close enough/have a large enough screen to benefit from going any higher than 4K:

Source: https://www.rtings.com/tv/learn/what-is-the-resolution

I went looking for a quick explainer on this, and that side of YouTube goes so in-depth that I am more confused.

I’ll add another explanation for bitrate that I find understandable: You can think of resolution as basically the max quality of a display; no matter the bitrate, you can’t display more information/pixels than the screen possesses. Bitrate, on the other hand, represents how much information you are receiving from e.g. Netflix. If you didn’t use any compression, in HDR each pixel would require 30 bits, or 3.75 bytes of data. A 4k screen has 8 million pixels. An HDR stream running at 60 fps would require about 1.7GB/s of download without any compression. Bitrate is basically the measure of that: how much we’ve managed to compress that data flow. There are many ways you can achieve this compression, and a lot of it relates to how individual codecs work, but put simply, one of the many methods effectively involves grouping pixels into larger blocks (e.g. 32x32 pixels) and saying they all have the same colour. As a result, at low bitrates you’ll start to see blocking and other visual artifacts that significantly degrade the viewing experience.
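The uncompressed figure is easy to check with a few lines of arithmetic. This is only a rough sketch: the 3840x2160 frame, 30 bits per pixel and 60 fps come from the comment, while the 15 Mbit/s stream bitrate is an assumed, illustrative number and not something any particular service quotes.

```python
def raw_bytes_per_second(width, height, bits_per_pixel, fps):
    """Uncompressed video data rate in bytes per second."""
    bits_per_frame = width * height * bits_per_pixel
    return bits_per_frame * fps / 8


# Figures from the comment: 4K frame, 30 bits per pixel (10-bit HDR), 60 fps.
raw = raw_bytes_per_second(3840, 2160, 30, 60)

# ASSUMPTION: 15 Mbit/s is only an illustrative streaming bitrate.
stream_bits_per_second = 15_000_000
compression_ratio = (raw * 8) / stream_bits_per_second

print(f"raw: {raw / 1e9:.2f} GB/s ({raw / 2**30:.2f} GiB/s)")
print(f"compression needed: about {compression_ratio:.0f}:1")
```

With these inputs the raw stream comes to roughly 1.87 GB/s in decimal units, or about 1.74 GiB/s, so the "about 1.7GB/s" estimate holds up. Squeezing that into the assumed 15 Mbit/s means discarding or predicting all but roughly one part in a thousand of the data, which is why low bitrates show blocking so quickly.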

As a side note, one cool thing that codecs do (not sure if literally all of them do it, but I think most by far) is that not every frame is encoded in its entirety. You have I, P and B frames. I frames (also known as keyframes) are a full frame; they’re fully defined and are basically like a picture. P frames don’t define every pixel; instead they define the difference between their frame and the previous frame, e.g. that the pixel at x: 210 y: 925 changed from red to orange. B frames do the same, but they use both previous and future frames for reference. That’s why you might sometimes notice that in a stream, even when the quality isn’t changing, every couple of seconds the picture will become really clear, before gradually degrading in quality, and then suddenly jumping up in quality again.
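The keyframe-plus-differences idea can be shown with a toy encoder. This is a deliberately simplified sketch and not how any real codec works: it stores lossless per-pixel changes on tiny made-up frames, whereas real codecs predict whole blocks with motion compensation and compress the leftovers lossily. B frames are left out, and `encode`, `decode` and `keyframe_interval` are hypothetical names used only for illustration.

```python
def encode(frames, keyframe_interval=3):
    """Encode equal-length frames (lists of pixel values) as I and P frames."""
    encoded = []
    previous = None
    for i, frame in enumerate(frames):
        if i % keyframe_interval == 0:
            # I frame: store every pixel.
            encoded.append(("I", list(frame)))
        else:
            # P frame: store only (position, new_value) for changed pixels.
            changes = [(pos, value)
                       for pos, (old, value) in enumerate(zip(previous, frame))
                       if old != value]
            encoded.append(("P", changes))
        previous = frame
    return encoded


def decode(encoded):
    """Rebuild the full frames from the I/P stream."""
    frames = []
    current = None
    for kind, payload in encoded:
        if kind == "I":
            current = list(payload)
        else:
            # Apply the stored changes on top of the previous frame.
            current = list(current)
            for pos, value in payload:
                current[pos] = value
        frames.append(current)
    return frames


frames = [
    [1, 1, 1, 1],
    [1, 2, 1, 1],  # one pixel changed
    [1, 2, 1, 3],  # one more pixel changed
    [5, 5, 5, 5],  # falls on the next keyframe
]
stream = encode(frames)
print(stream)
print(decode(stream) == frames)
```

The two P frames each carry a single changed pixel instead of four values, which is where the savings come from. In a lossy codec the errors in those predictions accumulate until the next keyframe resets the picture, which matches the periodic jump in clarity described above.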

For an ELI5 explanation, this is what happens when you lower the bit rate: https://youtu.be/QEzhxP-pdos

No matter the resolution you have of the video, if the amount of information per frame is so low that it has to lump different coloured pixels together, it will look like crap.

On codecs and bitrate? It’s basically codec = file type (.avi, .mp4) and bitrate is how much data is sent per second for the video. Videos only track what changed between frames, so a video of a still image can be 4k with a really low bitrate, but if things are moving it’ll get really blurry with a low bitrate even in 4k.

“File types” like avi, mp4, etc are container formats. Codecs encode video streams that can be held in different container formats. Some container formats can only hold video streams encoded with specific codecs.

ah yeah I figured it wasn’t quite right, I just remember seeing the codec on the details and figured it was tied to it, thanks.

The resolution (4k in this case) defines the number of pixels to be shown to the user. The bitrate defines how much data is provided in the file or stream. A codec is the method for converting data to pixels.

Suppose you’ve recorded something in 1080p (low resolution). You could convert it to 4k, but the codec has to make up the pixels that can’t be computed from the data.

In summary, the TV in my living room might be more capable, but my streaming provider probably isn’t sending enough data to really use it.

I don’t like large 4k displays because the resolution is so good it breaks the immersion when you watch a movie. You can see that they are on a set sometimes, or details of clothing in medieval movies that give away they were created with modern sewing equipment.

It’s a bit of a stupid reason I guess, but that’s why I don’t want to go above 1080p for TVs.

I’ve been looking at screens for 50+ years, and I can confirm, my eyesight is worse now than 50 years ago.

Kind of a tangent, but properly encoded 1080p video with a decent bitrate actually looks pretty damn good.

A big problem is that we’ve gotten so used to streaming services delivering visual slop, like YouTube’s 1080p option which is basically just upscaled 720p and can even look as bad as 480p.

Yeah I’d way rather have higher bitrate 1080 than 4k. Seeing striping in big dark or light spots on the screen is infuriating

i’d rather have proper 4k.

Stremio

For most streaming? Yeah.

Give me a good 4k Blu-ray though. High bitrate 4k

I mean yeah I’ll take higher quality. I’d just rather have less lossy compression than higher resolution

I was wondering when we’d get to the snake oil portion of the video hobby that audiophiles have been suffering. 8k vs. 4k is the new lossy vs. lossless argument.

Just recently, on this site, someone tried to tell me that there was no audible difference between 128kbps and 320kbps mp3. Insane.

A big problem is that we’ve gotten so used to streaming services delivering visual slop, like YouTube’s 1080p option which is basically just upscaled 720p and can even look as bad as 480p.

YouTube is locking the good bitrates behind the premium paywall, and even as a premium user you don’t get to select a high bitrate when the source video was low res.

That’s why videos should be upscaled before upload to force YouTube into offering high bitrate options at all. A good upscaler produces better results than simply stretching low-res videos.

I think the premium thing is a channel option. Some channels consistently have it, some don’t.

Regular YouTube 1080p is bad and feels like 720p. The encoding on videos with “Premium 1080p” is catastrophic. It’s significantly worse than decently encoded 480p. Creators will put a lot of time and effort in their lighting and camera gear, then the compression artifacting makes the video feel like watching a porn bootleg on a shady site. I guess there must be a strong financial incentive to nuke their video quality this way.

This. The visual difference of good vs bad 1080p is bigger than between good 1080p and good 4k. I will die on this hill. And Youtube’s 1080p is garbage on purpose so they get you to buy premium to unlock good 1080p. Assholes

The 1080p for premium users is garbage too. YouTube’s video quality in general is shockingly poor. If there is even a slight amount of noisy movement on screen (foliage, confetti, rain, snow, etc.) the video can literally become unwatchable.

I can still find 480p videos from when YouTube first started that rival the quality of the compressed crap “1080p” we get from YouTube today. It’s outrageous.

Sadly most of those older YouTube videos have been run through multiple re-compressions and look so much worse than they did at upload. It’s a major bummer.

I’ve been investing in my Blu-ray collection again and I can’t believe how good 1080p Blu-rays look compared to “UHD streaming”.

HEVC is damn efficient. I don’t even bother with HD because a 4K HDR encode around 5-10GB looks really good and streams well for my remote users.

I stream YouTube at 360p. Really don’t need much for that kind of video.

360p is awful, 720p is the sweet spot IMO.

The main advantages in 4K TVs “looking better” are…

- HDR support. Especially Dolby Vision, which gives a noticeably better picture in bright scenes.
- Support for higher framerates. This is only really useful for gaming, at least until they broadcast sports at higher framerates.
- The higher resolution is mostly wasted on video content where for the most part the low shutter speed blurs any moving detail anyway. For gaming it does look better, even if you have to cheat with upscaling and DLSS.
- The motion smoothing. This is a controversial one, because it makes movies look like swirly home movies. But the types of videos used in the shop demos (splashing slo-mo paints, slow shots of jungles with lots of leaves, dripping honey, etc.) do look nice with the motion interpolation switched on. They certainly don’t show clips of the latest blockbuster movies like that, because it would rapidly become apparent just how jarring that looks.

The higher resolution is just one part of it, and it’s not the most important one. You could have the other features on a lower resolution screen, but there’s no real commercial reason to do that, because large 4K panels are already cheaper than the 1080p ones ever were. The only real reason to go higher than 4K would be for things where the picture wraps around you, and you’re only supposed to be looking at a part of it, e.g. 180 degree VR videos and special screens like the Las Vegas Sphere.

I just love how all the articles and everything about this study go “Do you need another TV or monitor?” instead of “here’s a chart how to optimize your current setup, make it work without buying shit”. 😅

Selling TVs and monitors is an established business with common interest, while optimizing people’s setups isn’t.

It’s a bit like the opposite of building a house: a cubic meter or two of cut wood doesn’t cost very much, even combined with other necessary materials, but to get a usable end result people still hire someone other than the workers who do the physical labor.

There are those “computer help” people running around helping grannies clean Windows from viruses (I mean those who are not scammers), they probably need to incorporate. Except then such corporate entities will likely be sued without end by companies willing to sell new shit. Balance of power.

ITT: people defending their 4K/8K display purchases as if this study was a personal attack on their financial decision making.

Resolution doesn’t matter as much as pixel density.

My 50" 4K TV was $250. That TV is now $200, nobody is flexing the resolution of their 4k TV, that’s just a regular cheap-ass TV now. When I got home and started using my new TV, right next to my old 1080p TV just to compare, the difference in resolution was instantly apparent. It’s not people trying to defend their purchase, it’s people questioning the methodology of the study because the difference between 1080p and 4k is stark unless your TV is small or you’re far away from it. If you play video games, it’s especially obvious.

Old people with bad eyesight watching their 50" 12 feet away in their big ass living room vs young people with good eyesight 5 feet away from their 65-70" playing a game might have inherently differing opinions.

12’ 50" FHD = 112 PPD

5’ 70" FHD = 36 PPD

The study basically says that FHD is about as good as you can get 10 feet away on a 50" screen all other things being equal. That doesn’t seem that unreasonable
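The PPD numbers quoted above can be reproduced with basic trigonometry. The sketch below assumes a 16:9 panel and defines pixels per degree as horizontal pixels divided by the horizontal angle the screen covers at the viewing distance; that averaging definition is an assumption (the study may measure it differently), and `pixels_per_degree` is a hypothetical helper name.

```python
import math


def pixels_per_degree(diagonal_in, distance_in, horizontal_px, aspect=(16, 9)):
    """Average horizontal pixels per degree of visual angle."""
    aw, ah = aspect
    # Screen width from the diagonal and the aspect ratio.
    width_in = diagonal_in * aw / math.hypot(aw, ah)
    # Horizontal angle the screen spans as seen from the viewing distance.
    fov_deg = 2 * math.degrees(math.atan(width_in / (2 * distance_in)))
    return horizontal_px / fov_deg


FHD = 1920  # horizontal pixels of a 1080p panel
print(round(pixels_per_degree(50, 12 * 12, FHD)))  # 50" at 12 ft
print(round(pixels_per_degree(70, 5 * 12, FHD)))   # 70" at 5 ft
print(round(pixels_per_degree(50, 10 * 12, FHD)))  # 50" at 10 ft
```

Under these assumptions the three cases round to 112, 36 and 93 PPD, matching the figures in the thread.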

Right? “Yeah, there is a scientific study about it, but what if I didn’t read it and go by feelings? Then I will be right and don’t have to reexamine shit about my life, isn’t that convenient”

They don’t need to; this study does it for them. 94 pixels per degree is the top end of perceptible. On a 50" screen 10 feet away, 1080p = 93. Closer than 10 feet or larger than 50", or some combination of both, and it’s better to have a higher resolution.
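Turning the same relationship around gives the viewing distance at which a screen reaches a given pixels-per-degree value. This is a sketch under stated assumptions: a 16:9 panel, PPD taken as horizontal pixels divided by the horizontal viewing angle, and the 94 PPD threshold as quoted in the comment. `distance_for_ppd` is a hypothetical helper name.

```python
import math


def distance_for_ppd(diagonal_in, horizontal_px, target_ppd=94, aspect=(16, 9)):
    """Viewing distance (inches) at which the screen averages target_ppd."""
    aw, ah = aspect
    width_in = diagonal_in * aw / math.hypot(aw, ah)
    # Angle the screen must span for the pixels to average target_ppd.
    fov_deg = horizontal_px / target_ppd
    return (width_in / 2) / math.tan(math.radians(fov_deg / 2))


for name, px in (("1080p", 1920), ("4K", 3840)):
    feet = distance_for_ppd(50, px) / 12
    print(f'50" {name}: about {feet:.1f} ft')
```

For a 50" screen this puts the 1080p threshold at roughly 10 ft and the 4K threshold at roughly 5 ft. Sitting closer than the 1080p distance is where, by this measure, extra resolution could start to be visible.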

For millennials, home ownership has crashed but TVs are cheaper and cheaper. For the half of motherfuckers rocking their 70" TV that cost $600 in their shitty apartment where they sit 8 feet from the TV, it’s pretty obvious 4K is better at 109 vs. 54 PPD.

Also, although the article points out that there are other features that matter as much as resolution, these aren’t uncorrelated factors. 1080p TVs of any size in 2025 are normally bargain basement garbage that suck on all fronts.

This study was brought to you by every streaming service.

I can pretty confidently say that 4k is noticeable if you’re sitting close to a big tv. I don’t know that 8k would ever really be noticeable, unless the screen is strapped to your face, a la VR. For most cases, 1080p is fine, and there are other factors that start to matter way more than resolution after HD. Bit-rate, compression type, dynamic range, etc.

Seriously, articles like this are just clickbait.

They also ignore all sorts of usecases.

Like for a desktop monitor, 4k is extremely noticeable vs even 1440P or 1080P/2k

Unless you’re sitting very far away, the sharpness of text and therefore amount of readable information you can fit on the screen changes dramatically.

You should actually read it, they specified what they looked at.

The article was about TVs, not computer monitors. Most people don’t sit nearly as close to a TV as they do a monitor.

Oh absolutely, but even TVs are used in different contexts.

Like the thing about text applies to console games, applies to menus, applies to certain types of high detail media etc.

Complete bullshit articles. The same thing happened when 720p became 1080p. So many echoes of “oh you won’t see the difference unless the screen is huge”… like no, you can see the difference on a tiny screen.

We’ll have these same bullshit arguments when 8k becomes the standard, and for every large upgrade from there.

I agree to a certain extent, but there are diminishing returns, same with refresh rates. The leap from 1080 to 4k is big. I don’t know how noticeable upgrading from 4k to 8k would be for the average TV setup.

For vr it would be awesome though

So, a 55-inch TV, which is pretty much the smallest 4k TV you could get when they were new, has benefits over 1080p at a distance of 7.5 feet… how far away do people watch their TVs from? Am I weird?

And at the size of computer monitors, for the distance they are from your face, they would always have full benefit on this chart. And even working into 8k a decent amount.

And that’s only for people with typical vision, for people with above-average acuity, the benefits would start further away.

But yeah, for VR for sure, since having an 8k screen there would directly determine how far away a 4k flat screen can be properly re-created. If your headset is only 4k, a 4k flat screen in VR is only worth it when it takes up most of your field of view. That’s how I have mine set up, but I would imagine most people would prefer it to be half the size or twice the distance away, or a combination.

So 8k screens in VR will be very relevant for augmented reality, since performance costs there are pretty low anyway. And still convey benefits if you are running actual VR games at half the physical panel resolution due to performance demand being too high otherwise. You get some relatively free upscaling then. Won’t look as good as native 8k, but benefits a bit anyway.

There is also fixed and dynamic foveated rendering to think about, with an 8k screen, even running only 10% of it at that resolution and 20% at 4k, 30% at 1080p, and the remaining 40% at 540p, even with the overhead of so many foveation steps, you’ll get a notable reduction in performance cost. Fixed foveated would likely need to lean higher towards bigger percentages of higher res, but has the performance advantage of not having to move around at all from frame to frame. Can benefit from more pre-planning and optimization.
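The savings from that foveation split can be estimated by weighting each tier's pixel density by the share of the screen it covers. This is a back-of-the-envelope sketch: the area shares come from the comment, the pixel counts are the standard 16:9 sizes (assumed), and the per-tier overhead the comment mentions is ignored entirely.

```python
# Pixel counts for each resolution tier (standard 16:9 sizes, assumed).
TIERS = {
    "8K": 7680 * 4320,
    "4K": 3840 * 2160,
    "1080p": 1920 * 1080,
    "540p": 960 * 540,
}

# Share of the screen area rendered at each tier, from the comment.
AREA_SHARE = {"8K": 0.10, "4K": 0.20, "1080p": 0.30, "540p": 0.40}


def relative_pixel_load(area_share, tiers, native="8K"):
    """Fraction of native-resolution pixels that still have to be rendered."""
    return sum(share * tiers[name] / tiers[native]
               for name, share in area_share.items())


load = relative_pixel_load(AREA_SHARE, TIERS)
print(f"{load:.1%} of a full 8K frame")
print(f"{load * TIERS['8K'] / TIERS['4K']:.0%} of a full 4K frame")
```

Ignoring overhead, that split renders about 17.5% of the pixels in a full 8K frame, which is about 70% of the pixels in a full 4K frame. That is consistent with the claim that the performance cost drops notably.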

A lot of us mount a TV on the wall and watch from a couch across the room.

And you get a TV small enough that it doesn’t suit that purpose? Looks like 75 inch to 85 inch is what would suit that use case. Big, but still common enough.

I’ve got an LCD 55" TV and a 14" laptop. On the couch, the TV screen looks to me about as big as the laptop screen on my belly/lap, and I’ve got perfect vision; on the laptop I can clearly see the difference between 4k and Full HD, on the TV, not so much.

I think TV screens aren’t as good as PC ones, but also the TVs’ image processors turn the 1080p files into better images than what computers do.

Hmm, I suppose quality of TV might matter. Not to mention actually going through the settings and making sure it isn’t doing anything to process the signal. And also not streaming compressed crap to it. I do visit other people’s houses sometimes and definitely wouldn’t know they were using a 4k screen to watch what they are watching.

But I am assuming actually displaying 4k content to be part of the testing parameters.

Yeah well my comparisons are all with local files, no streaming compression

Also, usually when people use the term “perfect” vision, they mean 20/20. Is that the case for you too? Another term for that is average vision, with people that have better vision than that having “better than average” vision.

Idk what 20/20 is, I guess you guys use a different scale, last mandatory vision test at work was 12/10 with 6/7 on I don’t remember which color recognition range, but I’m not sure about the latter 'cause it was ok last year and 6/7 the year before also. IIRC the best score for visual acuity is 18/10, but I don’t think they test that far during work visits, I’d have to go to the ophthalmologist to know.

I would imagine it’s the same scale, just a base 10 feet instead of 20 feet. So in yours you would see at 24 feet what the average person would see at 20 feet. Assuming there is a linear relation, and no circumstantial drop off.

There’s a giant TV at my gym that is mounted right in front of some of the equipment, so my face is inches away. It must have some insane resolution because everything is still as sharp as a standard LCD panel.

8K would probably be really good for large computer monitors, due to viewing distances. It would be really taxing on the hardware if you were using it for gaming, but reasonable for tasks that aren’t graphically intense.

Computer monitors (for productivity tasks) are a little different though, in that you are looking at a section of the screen rather than the screen as a whole as one might with video. So having extra screen real estate can be rather valuable.

The counterpoint is that if you’re sitting that close to a big TV, it’s going to fill your field of view to an uncomfortable degree.

4k and higher is for small screens close up (desktop monitor), or very large screens in dedicated home theater spaces. The kind that would only fit in a McMansion, anyway.

People are legit sitting 15+ feet away and thinking a 55 inch TV is good enough… Optimal viewing angles for most reasonably sized rooms require a 100+ inch TV and 4k or better.

Good to know that pretty much anything looks fine on my TV, at typical viewing distances.

Would be a more useful graph if the y axis cut off at 10, less than a quarter of what it plots.

Not sure in what universe discussing the merits of 480p at 45 ft is relevant, but it ain’t this one. If I’m sitting 8 ft away from my TV, I will notice the difference if my screen is over 60 inches, which is where a vast majority of consumers operate.

How many feet away is a computer monitor?

Or a 2-4 person home theater distance that has good fov fill?

i can confirm 4K and up add nothing for me compared to 1080p and even 720p. As long as i can recognize the images, who cares. Higher resolution just means you see more sweat, pimples, and the like.

edit: wait correction. 4K does add something to my viewing experience which is a lot of lagging due to the GPU not being able to keep up.

8k no. 4k with a 4k Blu-ray player on actual non upscaled 4k movies is fucking amazing.

I don’t know if this will age like my previous belief that PS1 had photo-realistic graphics, but I feel like 4k is the peak for TVs. I recently bought a 65" 4k TV and not only is it the clearest image I’ve ever seen, but it takes up a good chunk of my living room. Any larger would just look ridiculous.

Unless the average person starts using abandoned cathedrals as their living rooms, I don’t see how larger TVs with even higher definition would even be practical. Especially if you consider we already have 8k for those who do use cathedral entertainment systems.

(Most) TVs still have a long way to go with color space and brightness. AKA HDR. Not to speak of more sane color/calibration standards to make the picture more consistent, and higher ‘standard’ framerates than 24FPS.

But yeah, 8K… I dunno about that. Seems like a massive waste. And I am a pixel peeper.

For media I highly agree. 8k doesn’t seem to add much. For computer screens I can see the purpose though as it adds more screen real estate which is hard to get enough of for some of us. I’d love to have multiple 8k screens so I can organize and spread out my work.

Are you sure about that? You likely use DPI scaling at 4K, and you’re likely limited by physical screen size unless you already use a 50” TV (which is equivalent to 4x standard 25” 1080p monitors).
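The equivalence between a 50” 4K panel and four 25” 1080p monitors comes down to pixel density. A minimal sketch, where `pixels_per_inch` is a hypothetical helper that measures density along the diagonal:

```python
import math


def pixels_per_inch(diagonal_in, width_px, height_px):
    """Pixel density measured along the diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


ppi_25_fhd = pixels_per_inch(25, 1920, 1080)
ppi_50_4k = pixels_per_inch(50, 3840, 2160)
print(f'25" 1080p: {ppi_25_fhd:.1f} PPI')
print(f'50" 4K:    {ppi_50_4k:.1f} PPI')
```

Both come out at about 88 PPI. A 4K panel has twice the pixels in each direction, so at twice the diagonal every pixel is the same physical size, and without DPI scaling the larger panel offers the workspace of a 2x2 grid of the smaller monitors.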

8K would only help at like 65”+, which is kinda crazy for a monitor on a desk… Awesome if you can swing it, but most can’t.

I tangentially agree though. PCs can use “extra” resolution for various things like upscaling, better text rendering and such rather easily.

Truthfully I haven’t gotten a chance to use an 8k screen, so my statement is more hypothetical “I can see a possible benefit”.

I’ve used 5K some.

IMO the only ostensible benefit is for computer type stuff. It gives them more headroom to upscale content well, to avoid anti aliasing or blurry, scaled UI rendering, stuff like that. 4:1 rendering (to save power) would be quite viable too.

Another example would be editing workflows, for 1:1 pixel mapping of content while leaving plenty of room for the UI.

But for native content? Like movies?

Pointless, unless you are ridiculously close to a huge display, even if your vision is 20/20. And it’s too expensive to be worth it: I’d rather that money go into other technical aspects, easily.

The frame rate really doesn’t need to be higher. I fully understand filmmakers who balk at the idea of 48 or 60 fps movies. It really does change the feel of them and imo not in a necessarily positive way.

I respectfully disagree. Folk’s eyes are ‘used’ to 24P, but native 48 or 60 looks infinitely better, especially when stuff is filmed/produced with that in mind.

But at a bare minimum, baseline TVs should at least eliminate jitter with 24P content by default, and offer better motion clarity by moving on from LCDs, using black frame insertion or whatever.

I think you’re right, but how many movies are available in UHD? Not too many I’d think. On my thrifting runs I’ve picked up 200 Blu-rays vs 3 UHDs. If we can map that ratio to the retail market that’s ~1.5% UHD content.

life changing. i love watching movies, but the experience you get from a 4k disc is insane.

Bullshit, actual factual 8k and 4k look miles better than 1080. It’s the screen size that makes a difference. On a 15inch screen you might not see much difference but on a 75 inch screen the difference between 1080 and 4k is immediately noticeable. A much larger screen would have the same results with 8k.

You should publish a study

And publish it in Nature, a leading biomedical journal, and claim boldly.

With 44 inch at 2,5m

Sounds like a waste of time to do a study on something already well known.

Literally this article is about the study. Your “well-known” fact doesn’t hold up to scrutiny.

The other important detail to note is that screen size and distance to your TV also matters. The larger the TV, the more a higher resolution will offer a perceived benefit. Stretching a 1080p image across a 75-inch display, for example, won’t look as sharp as a 4K image on that size TV. As the age old saying goes, “it depends.”

literally in the article you are claiming to be correct, maybe you should try reading sometime.

Yes, but you got yourself real pissy over it and have just now admitted that the one piece of criticism you had in your original comment was already addressed in the article. Obviously if we start talking about situations that are extreme outliers there will be edge cases but you’re not adding anything to the conversation by acting like you’ve found some failure that, in reality, the article already addressed.

I’m not sure you have the reading comprehension and/or the intention to have any kind of real conversation to continue this discussion further.

It’s not my fault you can’t read.

It is your fault if you start an argument over your inability to read however.

So I have a pet theory on studies like that. There are many things out there that many of us take for granted and as givens in our daily lives. But there are likely equally as many people out there to which this knowledge is either unknown or not actually apparent. Reasoning for that can be a myriad of things; like due to a lack of experience in the given area, skepticism that their anecdotal evidence is truly correct despite appearances, and on and on.

What these “obvious thing is obvious” studies accomplish is setting a factual precedent for the people in the back. The people who are uninformed, not experienced enough, skeptical, contrarian, etc.

The studies seem wasteful upfront, but sometimes a thing needs to be said aloud to galvanize the factual evidence and give basis to the overwhelming anecdotal evidence.

It’s the screen size that makes a difference

Not by itself, the distance is extremely relevant. And at the distance a normal person sits away from a large screen, you need to get very large for 4k to matter, let alone 8k.

I like how you’re calling bullshit on a study because you feel like you know better.

Read the report, and go check the study. They note that the biggest gains in human visibility for displays come from contrast (largest reason), brightness, and color accuracy. All of which have drastically improved over the last 15 years. Look at a really good high end 1080p monitor and a low end 4k monitor and you will actively choose the 1080p monitor. It’s more pleasing to the eye, and you don’t notice the difference in pixel size at that scale.

Sure, distance plays some role, but they also accounted for that by performing the test at the same distance with the same screen size. They’re controlling for a variable you aren’t even controlling for in your own comment.

This has been my experience going from 1080 to 4K. It’s not the resolution, it’s the brighter colors that make the most difference.

And that’s not related to the resolution, yet people have tied higher resolutions to better quality.

Depends how far away you are. Human eyes have limited resolution.

For a 75 inch screen I’d have to watch it from my front yard through a window.

Have a 75" display, the size is nice, but still a ways from a theater experience, would really need 95" plus.