To be fair, though, this experiment was stupid as all fuck. It was run on /r/changemyview to see if users would recognize that the comments were created by bots. The study’s authors conclude that the users didn’t recognize this. [EDIT: To clarify, the study was seeing if it could persuade the OP, but they did this in a subreddit where you aren’t allowed to call out AI. If an LLM bot gets called out as such, its persuasiveness inherently falls off a cliff.]

Except, you know, Rule 3 of commenting in that subreddit is: “Refrain from accusing OP or anyone else of being unwilling to change their view, of using ChatGPT or other AI to generate text, [emphasis not even mine] or of arguing in bad faith.”

It’s like creating a poll to find out if women in Afghanistan are okay with having their rights taken away but making sure participants have to fill it out under the supervision of Hibatullah Akhundzada. “Obviously these are all brainwashed sheep who love the regime”, happily concludes the dumbest pollster in history.

I don’t particularly like this analogy, because /r/changemyview isn’t operating in a country where an occupying army was bombing weddings a few years earlier.

But this goes back to the problem at hand. People have their priors (my bots are so sick nasty that nobody can detect them / my liberal government was so woke and cool that nobody could possibly fail to love it) and then build their biases up around them like armor (any coordinated effort to expose my bots is cheating! / anyone who prefers the new government must be brainwashed!).

And the Bayesian Reasoning model fixates on the notion that there is only ever a discrete, predefined set of choices and a uniform set of biases that the participant must navigate within. No real room for nuance or relativism.

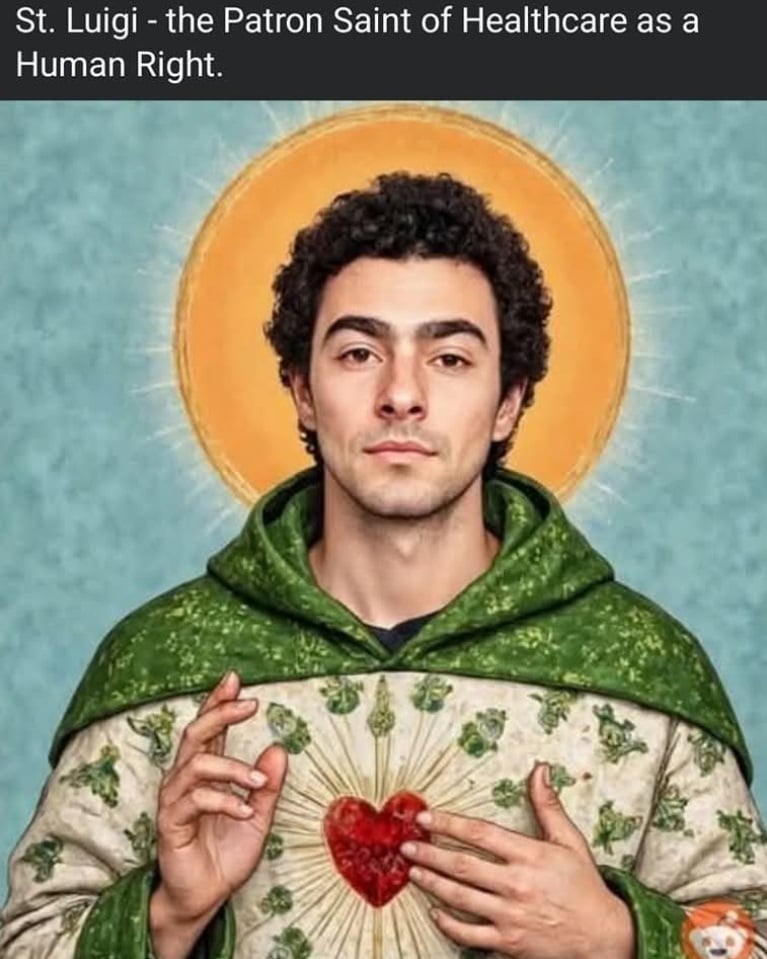

Deleted by moderator because you upvoted a Luigi meme a decade ago

…don’t mind me, just trying to make the reddit experience complete for you…

that’s funny.

I had several of my Luigi posts and comments removed – on Lemmy. Let’s see if it still holds true.

Well then, as Lemmy’s self-designated High Corvid of Progressivity, I extend to you the traditional Fediversal blessing of:

remember kids:

A place in heaven is reserved for those who speak truth to power

Lemmy is a collection of different instances with different administrators, moderators, and rules.

this was Lemmy.world that did it.

Last I knew, anything that had the word “Luigi” in the meme was blocked.

Err, yeah, I get the meme and it’s quite true in its own way…

BUT… This research team REALLY needs an ethics committee. A heavy-handed one.

As much as I want to hate the researchers for this, how are you going to ethically test whether you can manipulate people without… manipulating people. And isn’t there an argument to be made for harm reduction? I mean, this stuff is already going on. Do we just ignore it or only test it in sanitized environments that won’t really apply to the real world?

I dunno, mostly just shooting the shit, but I think there is an argument to be made that this kind of research and its results are more valuable than the potential harm. Tho the way this particular research team went about it, including changing the study fundamentally without further approval, does pose problems.

from what I remember from my early psych class, manipulation can be used, but should be used carefully in an experiment.

there’s a lot that goes into designing a research experiment that tests or requires the use of manipulation, as appropriate approvals and ethics reviews are needed.

and usually it should be done in a “controlled” environment where there’s some manner of consent and compensation.

I have not read the details done here but the research does not seem to happen in a controlled env, participants had no way to express consent to opt in or opt out, and afaik they were not compensated.

any psych or social sci peeps, feel free to jump in to correct me if I say something wrong.

on a side note, another thing that this meme suggests is that both of these situations are somehow equal. IMO, they are not. researchers and academics should be expected to uphold a code of ethics more so than corporations.

Tutoring psych right now - another big thing is the debrief.

It needs to be something you can’t do without lying, something important enough to be worth lying about, and you must debrief the participants at the end. I really doubt the researchers went back and messaged every single person that interacted with them revealing the lie.

That story is crazy and very believable. I 100% believe that AI bots are out there astroturfing opinions on reddit and elsewhere.

I’m unsure if that’s better or worse than real people doing it, as has been the case for a while.

Belief doesn’t even have to factor; it’s a plain-as-day truth. The sooner we collectively accept this fact, the sooner we change this shit for the better. Get on board, citizen. It’s better over here.

I worry that it’s only better here right now because we’re small and not a target. The worst we seem to get are the occasional spam bots. How are we realistically going to identify LLMs that have been trained on reddit data?

Honestly? I’m no expert and have no actionable ideas in that direction, but I certainly hope we’re able to work together as a species to overcome the unchecked greed of a few parasites at the top. #LuigiDidNothingWrong

So they banned the people that successfully registered a bunch of AI bots and had them fly under the mods’ radar. I’m sure they’re devastated and will never be able to get on the site again…

Manipulating users with AI bots to research what, exactly?

Researching what!!!

After all, it’s all about con$$ent, eh?

Fuck reddit and its inane smarmy rules

Insert same picture meme